Scheduling with Priorities

Contents

10.3. Scheduling with Priorities#

We first discuss scheduling with fixed priorites for real time, and then how priorities can be used dynamically to support an interactive system.

10.3.1. Fixed Priority#

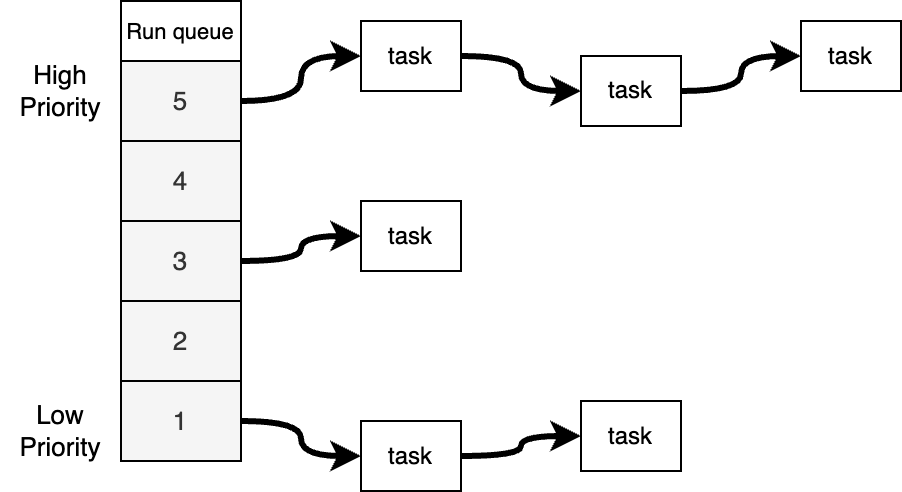

The core idea behind priority scheduling is that some processes may be more important than others and should be given access to the CPU first. For example, one reasonable assumption might be that tasks of students should only run if there are no tasks from professors. To implement this policy, the OS maintains two or more priority queues which hold tasks assigned to the corresponding priority. Runnable processes in a lower priority queue are only run if there are none in a higher priority queues. Figure Fig. 10.8 shows a simple example of a system with 4 priority queues. In this snapshot, the next process to be given CPU time will be the first process in the priority 4 queue. Assuming no additions, the processes in queue 3 will not run until all of the ones in 4 have completed.

Fig. 10.8 A simple example of a system with 5 priority queues and runnable processes in several of the queues.#

Let us consider a situation with three tasks shown in the table below:

Task |

Start |

Runtime |

Priority |

|---|---|---|---|

A |

0 |

6 |

1 |

B |

1 |

2 |

3 |

C |

2 |

2 |

3 |

D |

3 |

1 |

5 |

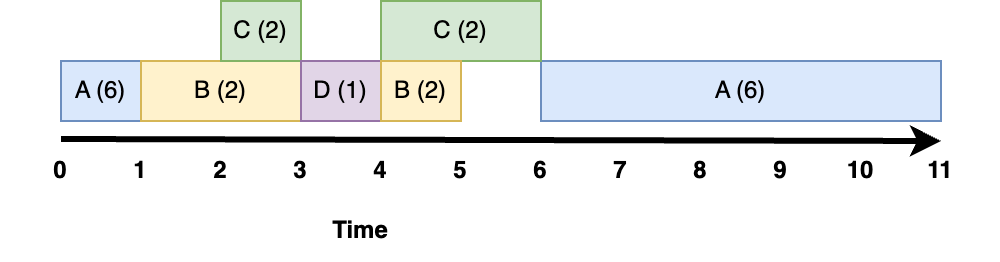

In that case, a priority scheduler will run tasks as shown in Fig. 10.9 below:

Fig. 10.9 Priority scheduling, where every task executes for a quantum of time.#

A runs by itself until B becomes ready to run. We then preempt A after it has accumulated one second of run time, and context switch to B since it is higher priority. When C starts running, it is the same priority as B, so they share the CPU (i.e., we switch back and forth between them every quantum). At time 3, D enters with the highest priority, and we stop running B and C to just run it. At that point, B has accumulated 1.5 sec of runtime, and C has accumulated .5 seconds. Once D is done, we again share the CPU using round robin between B and C, until B has accumulated its full 2 seconds of runtime, after which we can use the CPU just for running C. Finally, at second 6, all the other tasks are done and A, the lowest priority task, can be run until it completes. The average turnaround time here is \(((4-3) + (5-1) + (6-2) + 11)/4 = 5\) seconds.

Note, one challenge with a fixed priority scheme is that low priority work can starve if there is always higher priority work entering the system.

10.3.1.1. Tradeoffs#

Requirements:

User must specify priority of high priority work

The Good:

Supports real time for high priority

The Bad:

Bad for tasks that alternate between I/O and CPU

Doesn’t do anything to maintain high utilization of non-CPU resources

Poor turnaround time

Wouldn’t use for batch

Can result in Starvation

10.3.2. Variable Priority or Multilevel feedback#

While round robin does a better job than run to completion it doesn’t do a good job for I/O bound tasks. If there are many CPU intensive tasks. Consider the case of 100 CPU intensive tasks that each consume their full quantum of, say, 10msec. If the I/O intensive task only runs for a few usec, and then does some quick I/O, it might have to wait for \(10msec * 100 = 1 sec\) before it gets to run again. What we really want to do is to prioritize I/O intensive tasks. Unfortunately, we don’t know which tasks are CPU or I/O intensive a-priori, and further a task may go back and forth between being CPU and I/O intensive.

A very popular scheduling algorithm for this is called Multilevel feedback. It assumes the same kind of data structure shown in Fig. 10.9. One simple varient of the algorithm is:

When a task first starts running, we assume it is I/O intensive, and add it to the highest priority queue.

if a task consumes an entire quantum, we assume it is more CPU intensive, and decrease its priority by one before adding it to the runqueue.

If a task performs I/O before its quantum expires, when it becomes runnable we increase its priority (up to the highest level) before adding it to the runqueue.

To avoid starvation, we periodically move all the tasks to the highest priority queue.

Note, a system can support both fixed priorities, e.g., for real time tasks, and a range of priorities for dealing with general purpose tasks.

10.3.2.1. Tradeoffs#

Requirements:

requires no knowledge of task run time

The Good:

Good for interactive tasks and maintaining high utilization of non-cpu resources

The Bad:

Bad for real time and batch

While it can deal with starvation by periodically moving tasks to high priority queue, this is a bit of a hack rather than an intrinsic part of the algorithm.