3. Operating System Structure & Unix/Linux

We first discuss why we focus on Linux in this book, and then discuss how systems like Linux are structured and how the core services are provided. The next chapter describes some of the key abstractions those services provide to applications.

3.1. Unix and Linux

Hundreds of operating systems have been developed over the years, many of which are designed to hide most aspects of the computer from “normal” users. Those users mainly just want to use programs written by others. The interfaces of these operating systems tend to focus on graphics and visually oriented ways of interacting with programs.

Unix, on the other hand, assumes that its users are primarily programmers. Its design and collection of tools are not meant to be particularly user-friendly. Rather, Unix is designed to make programmers very productive and to support a broad range of programming tools.

Although most of this book applies to most operating systems, we typically focus on Unix and, in particular, on Linux, the open source variant of Unix, for a number of reasons:

- Many operating systems, including macOS and Android, have a form of Unix or Linux at their core. If you want to really understand these systems, you need to understand Unix.

- The Unix operating system was built by master programmers who valued programmability and productivity. In some sense, learning to work on a Unix system is a rite of passage that not only teaches you how to be productive on a computer running a Unix operating system, but also teaches you to think and act like a programmer.

- Unix is designed around a core principle of small programs that can be composed through file system interfaces. You are encouraged to write small reusable programs that you evolve incrementally as needed and combine with others to get big tasks done.

- Unix’s programming-oriented nature leads to an environment in which almost anything about the OS and user experience can be customized and programmed. Automation is the name of the game: almost everything you can do manually can be turned into a program that automates the task.

- Linux, the dominant Unix today, is open source, enabling programmers and researchers to go deep into the code. Anyone can modify the operating system to support their system or to enable their new workload.

- Unix/Linux’s programming-friendly nature has led to the development of a very large and rich body of existing software, with contributions from researchers, industry, students, and hobbyists alike. This body of software has become a large-scale shared repository of code, ideas, and programs that modern applications rely on heavily. As a result, the servers that form the core of the Internet and the Cloud largely run Linux. Many of the computers embedded in the devices that surround us, from Wi-Fi routers to medical devices to automobiles, also run a version of Linux. In fact, Android is, at its core, Linux.
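The idea of composing small programs, mentioned in the list above, can be sketched in a few lines. This is a minimal illustration, assuming a Unix-like system where the standard `sort` and `uniq` tools are installed; the function name `count_duplicates` is hypothetical and chosen only for this example. It connects the two programs with a pipe, the same thing a shell does for `sort | uniq -c`.

```python
import subprocess


def count_duplicates(lines):
    """Pipe lines through `sort | uniq -c` and return (count, line) pairs.

    Assumes non-empty lines and that `sort` and `uniq` are on the PATH.
    """
    text = "".join(line + "\n" for line in lines).encode()

    # First small program: sort the lines so that duplicates become adjacent.
    sort = subprocess.Popen(["sort"], stdin=subprocess.PIPE, stdout=subprocess.PIPE)
    # Second small program: count adjacent duplicates, reading sort's output
    # directly through a pipe (a file-like interface provided by the kernel).
    uniq = subprocess.Popen(["uniq", "-c"], stdin=sort.stdout, stdout=subprocess.PIPE)
    sort.stdout.close()  # uniq now holds the only read end of the pipe

    sort.stdin.write(text)
    sort.stdin.close()   # end of input lets sort finish and emit its output
    output, _ = uniq.communicate()
    sort.wait()

    pairs = []
    for row in output.decode().splitlines():
        count, line = row.split(None, 1)  # uniq -c pads the count with spaces
        pairs.append((int(count), line))
    return pairs
```

Neither `sort` nor `uniq` knows anything about the other; each simply reads from and writes to a file descriptor, which is what makes the combination possible.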

Unix recently celebrated its 50th anniversary.

50 Years of Unix

Its people and history offer a fascinating journey into how we got to where we are today.

The Strange Birth and Long Life of Unix

UNIX and its children literally make our digital world go around and will likely continue to do so for quite some time.

3.2. OS Structure and services

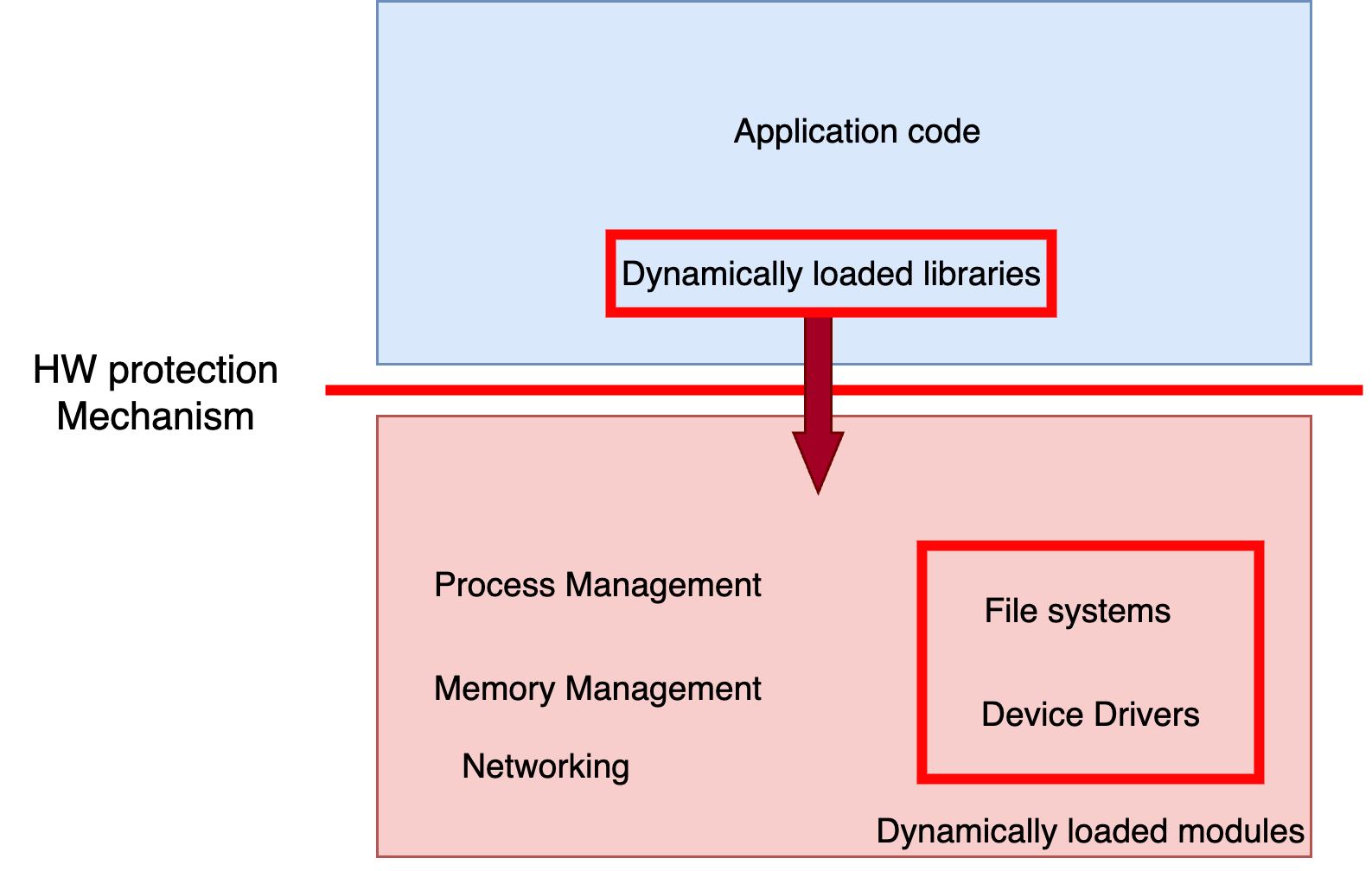

Fig. 3.1 What’s in a kernel

As stated earlier, operating systems provide a set of virtual abstractions on top of the primitive capabilities provided by hardware. Most operating systems today, and Linux in particular, provide these abstractions in the OS kernel (see Fig. 3.1). The kernel runs at the highest permission level in the hardware, and can access any memory or device. All untrusted application code runs in processes, where any access to the real computer is controlled by the kernel.

You can think of a process as a virtual computer that uses unprivileged computer instructions and a set of abstractions provided by the kernel. The kernel provides each process with: 1) an abstraction of an isolated CPU (while multiplexing the real CPU between different processes), 2) a virtual memory abstraction of a massive contiguous memory that starts at address 0x0, and 3) a set of file abstractions that allow the process to persist data and communicate with other processes.
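Two of these abstractions, private memory and file-based communication, can be observed from user code. The sketch below assumes a Unix-like system where Python's `os.fork` is available; the function name `fork_and_modify` is hypothetical. The child process changes a variable in its own copy of memory and reports the new value through a pipe, while the parent's copy stays untouched.

```python
import os


def fork_and_modify():
    """Return (value the child saw after its update, value the parent still sees)."""
    counter = 100
    read_end, write_end = os.pipe()  # a kernel-provided file abstraction
    pid = os.fork()                  # ask the kernel to create a new process

    if pid == 0:
        # Child: runs with its own private copy of the address space.
        try:
            os.close(read_end)
            counter += 1             # changes only the child's copy
            os.write(write_end, str(counter).encode())
        finally:
            os._exit(0)              # exit immediately, skipping parent cleanup code

    # Parent: its `counter` is unaffected by what the child did.
    os.close(write_end)
    child_value = int(os.read(read_end, 32))
    os.close(read_end)
    os.waitpid(pid, 0)               # collect the child's exit status
    return child_value, counter
```

The only way the child's value reaches the parent here is through the pipe, which is the point: memory is isolated per process, and communication goes through abstractions the kernel controls.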

Fig. 3.2 The kernel controls the computer and provides the abstraction of processes for application code.

Only the kernel can execute the privileged instructions that, for example, control the memory that a process can see, switch which process is currently running on a CPU, or control the I/O devices attached to the computer. While processes can only execute unprivileged instructions, the kernel adds logical instructions to the process, called system calls, that can be used to create new processes, communicate with other processes (virtual computers), allocate memory, and so on. These system calls are really special procedure calls that happen to switch to the kernel; the process stops executing until the kernel returns from the system call.
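To make the "special procedure call" view concrete, here is a small sketch using Python's `os` module, whose `open`, `write`, `read`, and `close` functions are thin wrappers around the system calls of the same names. The function names `roundtrip_through_kernel` and `failed_call_errno` are hypothetical, and the example assumes a small write to a regular file, which in practice completes in a single call.

```python
import errno
import os


def roundtrip_through_kernel(path, data):
    """Write data to path and read it back using only system call wrappers."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)  # open(2)
    try:
        os.write(fd, data)  # write(2): the process waits until the kernel returns
    finally:
        os.close(fd)        # close(2)

    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, len(data))  # read(2)
    finally:
        os.close(fd)


def failed_call_errno():
    """Return the error code the kernel reports for an invalid request."""
    try:
        os.close(-1)        # -1 is never a valid file descriptor
    except OSError as err:
        return err.errno    # the kernel refused the call with EBADF
    return 0
```

From the program's point of view each call looks like an ordinary function: it passes arguments, waits, and gets back either a result or an error code chosen by the kernel.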

An interrupt is a signal, raised by I/O devices and by other devices such as timers, that causes the CPU to start executing kernel code at some well-defined location. Interrupts allow the kernel to respond in a timely fashion to I/O operations: even if an application runs for a long time, the interrupt stops the process and starts executing the kernel, enabling it to notice that something has happened and to deal with it. The kernel can also schedule timer interrupts so that it regains control from processes every so often to, for example, let other processes run.
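The effect of periodic timer interrupts on scheduling can be illustrated with a toy model. The code below is a simulation only, not how any real kernel is implemented: it assumes a simple round-robin policy, and the names `round_robin`, `work`, and `quantum` are invented for this sketch. Each "timer interrupt" ends the current time slice and lets the next process run.

```python
from collections import deque


def round_robin(work, quantum):
    """Simulate timer-driven time slicing.

    work maps a process name to the units of CPU time it needs.
    Returns the list of (name, units_run) slices in the order they ran.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")

    ready = deque((name, units) for name, units in work.items() if units > 0)
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        # The process runs until it finishes or the timer interrupt fires.
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            # Preempted by the timer: go to the back of the ready queue.
            ready.append((name, remaining - ran))
    return timeline
```

For example, `round_robin({"A": 5, "B": 2, "C": 3}, quantum=2)` yields the slices A, B, C, A, C, A: no process waits for A's full five units before it gets a turn.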

Just as an interrupt informs the kernel that something has happened, the kernel provides a virtual abstraction called signals to let a process know that something has happened; a signal causes the process to start executing at a handler specified by the application. Signals can be used, for example, to enable asynchronous I/O or to let a process know that other processes have died.
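A minimal sketch of a signal handler follows, assuming a Unix-like system and that the code runs in the main thread (Python only allows handlers to be installed there). The function name `deliver_signal_to_self` and the choice of `SIGUSR1` are illustrative. The process registers a handler, asks the kernel to send it the signal, and records that the handler ran.

```python
import os
import signal
import time


def deliver_signal_to_self():
    """Install a SIGUSR1 handler, send the signal to this process, and
    return the list of signal numbers the handler received."""
    received = []

    def handler(signum, frame):
        # Runs asynchronously with respect to the normal flow of the program.
        received.append(signum)

    previous = signal.signal(signal.SIGUSR1, handler)  # register the handler
    try:
        os.kill(os.getpid(), signal.SIGUSR1)           # kill(2) sends a signal
        # Wait briefly (bounded) in case delivery is not immediate.
        deadline = time.monotonic() + 2.0
        while not received and time.monotonic() < deadline:
            time.sleep(0.01)
    finally:
        signal.signal(signal.SIGUSR1, previous)        # restore the old handler
    return received
```

The main code never calls `handler` directly; the kernel's delivery of the signal is what causes it to run.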

Fig. 3.3 Core services

The different core services provided by the kernel (see Fig. 3.3) multiplex the resources of the computer in time and space. They maintain information about the running processes, schedule the processor, manage memory, implement file systems, and enable communication with other processes both on the same computer and, over the network, to other computers. A large part of the operating system is device drivers, which implement code specific to a particular type of hardware, and are often provided by the hardware developer.

As shown in Fig. 3.3, large portions of the kernel are dynamically loaded. This allows new devices to be attached to an already running kernel, e.g., when you plug a portable drive into your PC. When the kernel starts up, it discovers all the devices that exist, figures out which device drivers and file systems it needs, and loads them. Similarly, large portions of applications are dynamically loaded libraries. Unless your application is really complicated, most of its code (probably around 90%) is actually library code. Those libraries are loaded into the process by the operating system at run time, which ensures that the most recent version of the library is used and allows the memory used by library code to be shared across all the processes running on the computer.
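Dynamic loading of libraries can be seen directly from a running program. The sketch below uses Python's `ctypes` module to load the C standard library at run time and call its `strlen` function. It assumes a Unix-like system; the function name `strlen_from_libc` is hypothetical, and the library file that is actually found varies between systems.

```python
import ctypes
import ctypes.util


def strlen_from_libc(text):
    """Load the C library at run time and call its strlen() on text (bytes)."""
    # Ask the system where the C library lives; the result differs by platform
    # and may be None, in which case CDLL(None) uses the symbols already
    # loaded into this process.
    libc_name = ctypes.util.find_library("c")
    libc = ctypes.CDLL(libc_name)

    # Describe the C signature: size_t strlen(const char *s)
    libc.strlen.argtypes = [ctypes.c_char_p]
    libc.strlen.restype = ctypes.c_size_t
    return libc.strlen(text)
```

The code for `strlen` is not part of this program; it lives in a shared library that the operating system maps into the process, and the same library code can be shared by every other process that uses it.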