Memory reclaiming algorithms.

Contents

This chapter describes various page replacement algorithms and how the referenced and dirty bits in the PTE are used.

14.4. Memory reclaiming algorithms.#

Global page reclaiming

FIFO, LRU, NRU…

Local page reclaiming

Reclaiming within a specific address space

Working set page reclaiming

14.4.1. The Memory Hierarchy#

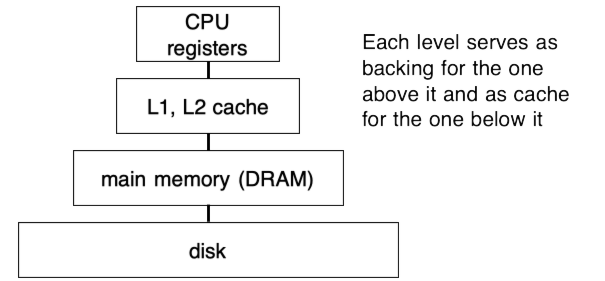

Demand paging from files and from swap provides the mechanisms to create the traditional memory hierarchy, as shown in Fig. 14.15.

Fig. 14.15 4-level Memory Hierarchy#

To access address A:

1.) If it’s not in the cache, then the old cache line is evicted, and A is loaded into the resulting empty cache line, this is done in hardware.

2.) If it’s not in memory, then the old page is evicted, and the page containing A is loaded into the resulting empty page, this is done in software.

3.) This page is mapped into the faulting virtual page via the page table entry, this is done in software.

In general, this works because of locality: when a cache line is brought in from memory, a page is loaded into in memory from disk, etc., it tends to get accessed multiple times before eviction.

Decades ago this was used to run programs much bigger than physical memory—CPUs were slow and disks were almost as fast as they are today, so the relative overhead of paging infrequently-used data to disk was low. Today’s CPUs are thousands of times faster, while disks are only a few times faster, and virtual memory doesn’t seem like such a great idea anymore. However it still gets used, even on desktop and laptop systems, to “steal” memory from idle programs: if you leave a large program like Chrome or Microsoft Word idle for half an hour while you use another memory-hungry program, memory will be released from the idle process and given to the active one; if you switch back, the original program will run slowly for a while as it swaps these pages back in.

14.4.2. Dirty and Clean Pages#

How does the operating system determine whether a page has been modified and needs to be written to disk? It uses the D bit in the page table entry for this, as seen in Fig. 14.4. When a page is mapped in the page table, the D bit in the PTE is set to zero; when the CPU writes to a page with D = 0, the MMU re-writes the page table entry with D = 1. When the OS decides to evict a page, the D bit tells it whether the page is “clean,” i.e., it hasn’t been modified, or whether it is “dirty” and has to be written back to disk.

When the OS is paging in from a file (e.g. executable code), it is straightforward to find the data to read in, as there is a direct mapping between a range of pages in a specific file and corresponding pages in the virtual memory space. This correspondence can easily be stored in the definition of that virtual address segment. When pages are saved to swap space this doesn’t work, however, as the locations they are saved to are allocated dynamically and fairly arbitrarily.

This problem is solved by using the page table itself. After evicting a page, its page table entry is invalidated by setting P = 0; however, the other 31 bits of the entry are ignored by the MMU. These bits are used to store the location of the page in swap space, so it can be found later later at page fault time. Thus, the page table entry does dual duty: when the page is present it points to the physical page itself, and is interpreted by the MMU; otherwise, it points to a location in swap space, and is ignored by the MMU and used by the software page fault handler.

14.4.3. Page Replacement#

If there’s a limited amount of memory available, then every time a page is swapped in from disk, it will be necessary to remove, or evict, another page from memory. The choice of which page to evict is important: the best page to choose would be one that won’t be needed anymore, while the worst page to evict would be one of the next to be used. (in that case, paging it back in would force another page to be evicted, and the work of paging it out and back in again would be wasted.) In fact, replacement of items in a cache is a general problem in computer systems; examples include:

Cache line replacement in the hardware CPU cache

Entry replacement in the TLB

Buffer replacement in a file system buffer pool

Page replacement in virtual memory

The page replacement problem can be stated in abstract form:

Given the following:

A disk holding \(d\) (virtual) pages, with virtual addresses \(0,\ldots d-1\);

A memory \({M}\) consisting of \(m\) (physical) pages, where each page is either empty or holds one of the \(d\) virtual pages, and

An access pattern \(a_1, a_2, a_3, \cdots\) where each \(a_i\) is a virtual address in the range \((0,d-1)\):

a demand-paging strategy is an algorithm which for each access \(a_i\) does the following:

If \(a_i\) is already in one of the \(m\) physical pages in \({M}\) (i.e. a hit): do nothing

Otherwise (a miss) it must:

Select a physical page \(j\) in \({M}\) (holding some virtual address \(M_j\)) and evict it, then

Fetch virtual page \(a_i\) from disk into physical page \(j\)

In other words it only fetches page \(j\) on demand—i.e. in response to a request for it.

14.4.4. Page Replacement Strategies#

In this class we consider the following page replacement strategies:

FIFO: first-in first-out. The page evicted from memory is the first page to have been fetched into memory.

LRU: least-recently used. Here, accesses to each page are tracked after it has been loaded into memory, and the least-recently-used page is evicted (unsurprisingly, given the name of the strategy).

OPT: this is the optimal demand-paged strategy, which is simple but impossible to implement, since it requires knowledge of the future. It’s examined because it provides a way of telling how well a real replacement strategy is performing—is it close to OPT, or is it far worse?

14.4.4.1. FIFO#

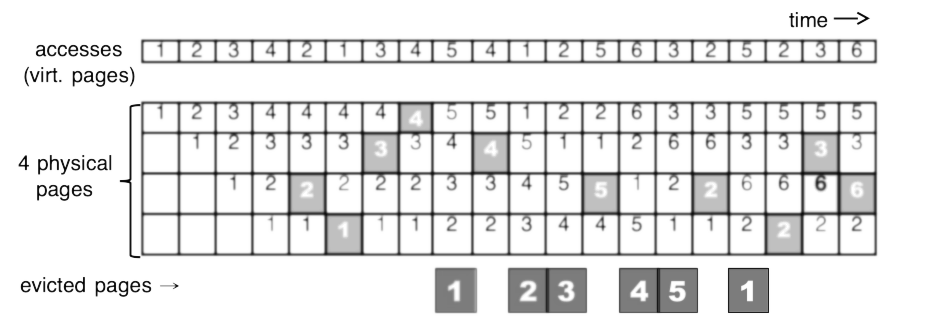

Fig. 14.16 Example of first-in-first-out (FIFO) page replacement#

This strategy is very simple to implement, as it only requires keeping track of the order in which pages were fetched into memory. Given 4 pages in physical memory, and the following access pattern:

1 2 3 4 2 1 3 4 5 4 1 2 5 6 3 2 5 2 3 6

The contents of memory after each access is shown in Fig. 14.16, with hits shown in light grey and pages evicted (when misses occur) shown in dark grey.

14.4.4.2. LRU#

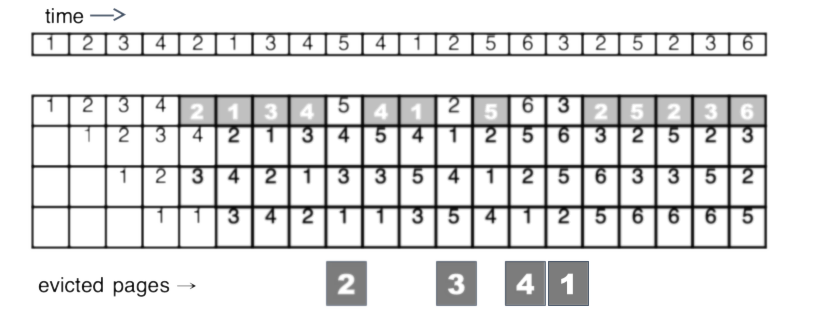

Fig. 14.17 Example of least-recently-used (LRU) page replacement#

The idea behind LRU is that pages which have been accessed in the recent past are likely to be accessed in the near future, and pages which haven’t, aren’t. LRU replacement is shown in Fig. 14.17.

To make the operation of the LRU algorithm more clear, on each hit, the accessed page is moved to the top of the column. (This is how LRU is typically implemented in software: elements are kept in a list, and on access, an element is removed and reinserted at the front of the list. The least-recently-used element may then be found by taking the tail of the list) Although this is a small example, a performance improvement is noted, with four misses compared to six for FIFO.

14.4.4.3. OPT#

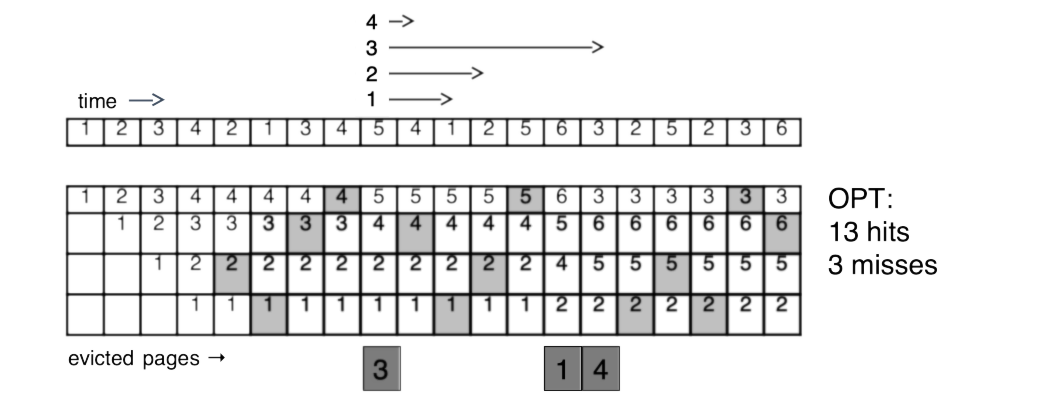

Fig. 14.18 Example of the optimal algorithm for page replacement#

The optimal algorithm picks a page to evict by looking forward in time and finding the page which goes for the longest time without being accessed again. Except for seeing the future, OPT plays by the same rules as other demand-paging algorithms: in particular, it can’t fetch a page until it is accessed. (That’s why the OPT strategy still has misses.) OPT is shown in Fig. 14.18, using the same access pattern as before. The first eviction decision is shown graphically: pages 4, 2, and 1 are accessed 1, 3, and 2 steps in the future, respectively, while page 3 isn’t accessed for 6 steps and is thus chosen to be evicted.

14.4.4.4. FIFO with Second Chance (CLOCK)#

Fig. 14.19 Example of FIFO with second chance#

LRU is simple and quite effective in many caching applications, and it’s ideal that the operating system uses it to determine which pages to evict from memory. But there is one small problem in using it in a virtual memory system: in this case, a “miss” corresponds to a page fault and fetching a page from disk, while a “hit” is when the page is already mapped in memory and the access succeeds in hardware. This means that once a page is faulted into memory, any further use of that page is “invisible” to the operating system. If the OS doesn’t know when a page was last used, it can’t implement the Least-Recently-Used replacement strategy.

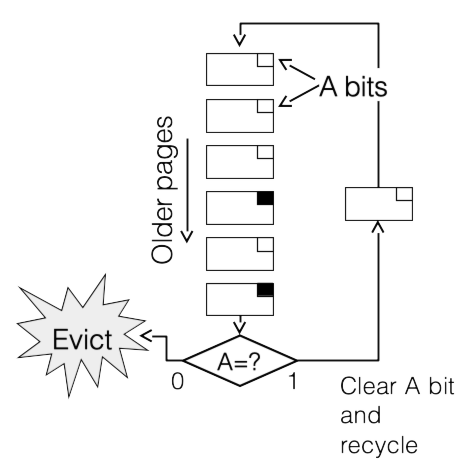

Despite this issue, it’s still possible to do better than FIFO by using the A (“accessed”) bit in the page table entry, which indicates whether the page has been accessed since the last time the bit was cleared[^18]. In Fig. 14.19 we see an algorithm called “FIFO with second chance,” where the A bit is used to determine whether a page has been accessed while it was in the FIFO queue. If the A bit is 1, the replacement algorithm clears it and re-writes the page table entry, and the page is given “another chance,” i.e., it is cycled back to the head of the list. If the A bit is 0, then there have been no accesses to the page during its entire trip through the list, and so it is selected for replacement.

14.4.4.5. CLOCK#

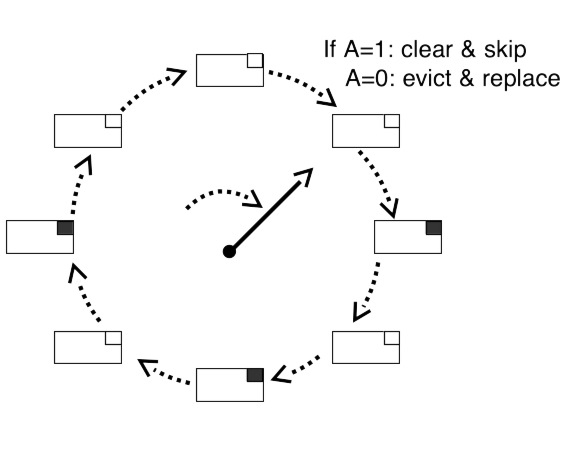

Fig. 14.20 Example of the CLOCK algorithm for page replacement#

An alternate way of visualizing the FIFO with second chance algorithm is shown in Fig. 14.20. Pages are arranged in a circle, with a “hand” advancing around the circle testing pages and determining whether to keep or evict them. This description is the origin of the widely-used name for this algorithm, CLOCK.